Susanne Morris

October 30, 2020

Since big data entered modern business models, data extraction, analysis, and processing have become increasingly essential to companies across all industries. As the volume of collected data grows, so does the need to read and understand it.

Just as natural languages require translation for people to communicate effectively, computer and programming languages require a comparable process. This is where data parsing comes in. In its simplest form, data parsing transforms unstructured and sometimes unreadable data into structured, easily readable data.

Whether you work within the development team of a company or take on customer-facing responsibilities such as marketing roles, understanding data and how it is transformed is essential for long-term business success.

This article explains data parsing on a deeper level, breaks down the structure of a parser, and compares building versus buying a solution for your company’s data analysis needs. It also discusses data parsing business use cases, including workflow optimization, web scraping, and investment analysis.

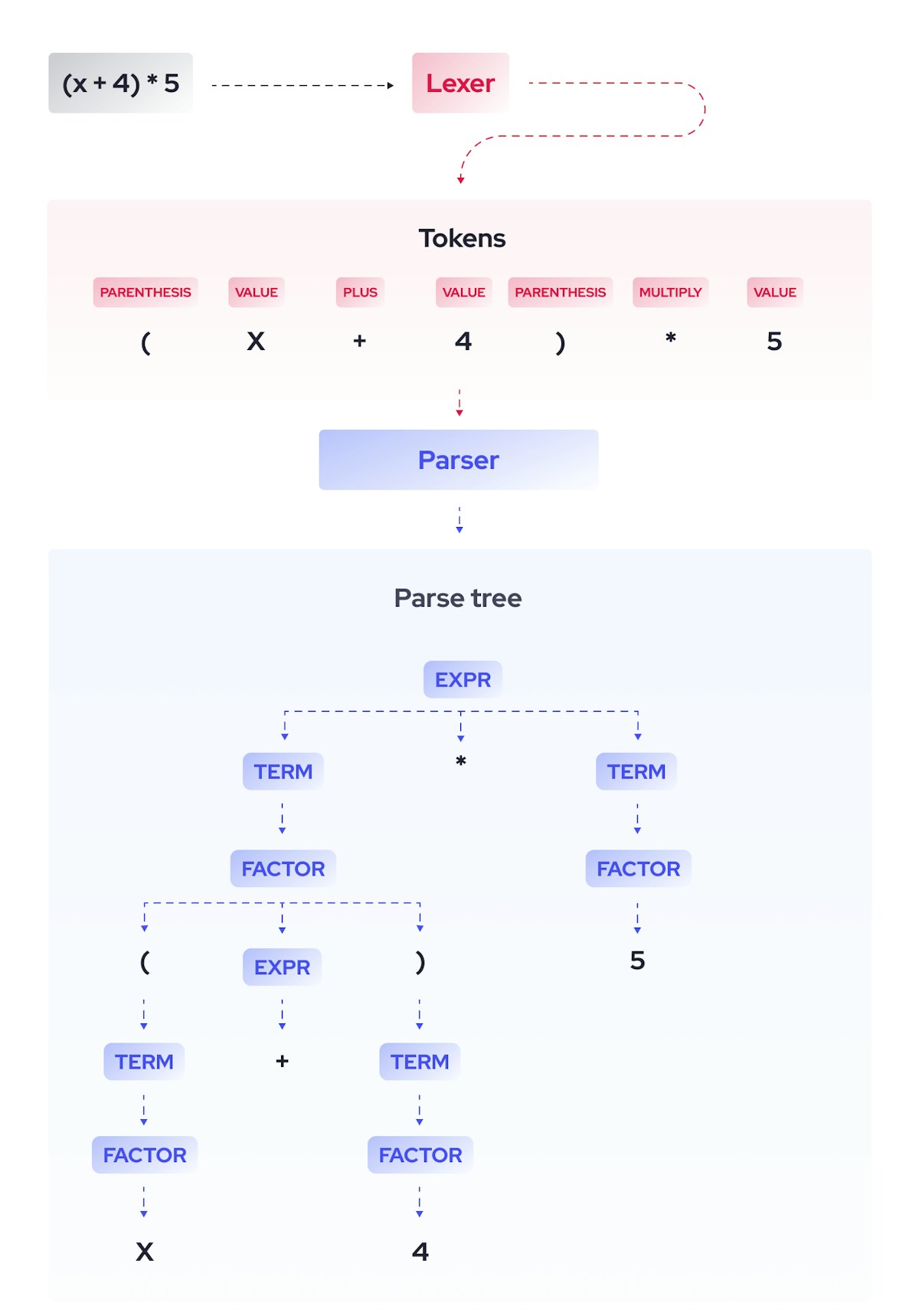

The structure of a parser

Generally speaking, parsing, or syntax analysis, is the process of analyzing a string of symbols in a language according to the rules of a formal grammar. In data analysis, this definition extends into a two-step process in which the parser is programmatically instructed on which data to read, analyze, or transform. The result is usually a more structured format.

The two components that make up a data parser are known as lexical analysis and syntactic analysis. Some parsers also offer a semantic analysis component, which takes the parsed and structured data and applies meaning. For instance, semantic analysis may further classify the data as positive or negative, complete or incomplete, and so on. Semantic analysis may enhance the data analysis process, but this is not always the case.

It’s important to note that while semantic analysis can yield helpful insights, it is not inherently built into the structure of most parsers, because semantic analysis is often left to humans. Treat it as an optional additional step that you can add if it complements your business goals.
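As a rough illustration of what such an optional semantic step might look like, the sketch below labels already-parsed records as complete or incomplete. The field names and the `label_completeness` helper are hypothetical and chosen only for this example.

```python
# Hypothetical required fields; a real project would define its own.
REQUIRED_FIELDS = ("name", "title", "company")


def label_completeness(record):
    """Label a parsed record 'complete' or 'incomplete'."""
    # A field counts as present only if it exists and is non-empty.
    has_all_fields = all(record.get(field) for field in REQUIRED_FIELDS)
    return "complete" if has_all_fields else "incomplete"


# Made-up records standing in for the output of a parser.
parsed_records = [
    {"name": "A. Example", "title": "Analyst", "company": "Acme"},
    {"name": "B. Example", "title": "", "company": "Globex"},
]

for record in parsed_records:
    print(record["name"], "->", label_completeness(record))
```

The labeling happens after parsing, so it can be added or left out without changing the parser itself.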

Data parsing has two main steps. Together these steps transform a string of unstructured data into a tree of data, whose rules and syntax are built into the tree’s structure. Let’s explore both steps.

Lexical analysis

The first step of data parsing is lexical analysis. In its simplest form, a lexer creates tokens from a sequence of characters that enters the parser as a string of raw, unstructured data. Oftentimes this string enters the parser in HTML format. The lexer creates tokens by recognizing lexical units (keywords, identifiers, and delimiters) while ignoring lexically irrelevant information (such as white space and comments).

The parser then discards any tokens that were identified as irrelevant during lexical analysis. The remainder of the parsing process falls into the category of syntactic analysis.
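The lexical step can be sketched in a few lines of Python. This is a minimal illustration for arithmetic expressions, not a production lexer; the token names and patterns in `TOKEN_SPEC` are assumptions made for this example.

```python
import re

# Token kinds and patterns are illustrative assumptions, not a standard.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/]"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),  # white space: lexically irrelevant
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)


def tokenize(text):
    """Turn a raw string into a list of (kind, value) tokens."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise ValueError(f"Unexpected character {text[pos]!r} at position {pos}")
        if match.lastgroup != "SKIP":  # drop white space instead of emitting it
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


print(tokenize("(x + 4) * 5"))
# [('LPAREN', '('), ('IDENT', 'x'), ('OP', '+'), ('NUMBER', '4'),
#  ('RPAREN', ')'), ('OP', '*'), ('NUMBER', '5')]
```

Each character of the input ends up either inside exactly one token or discarded as white space, which is the property the syntactic step relies on.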

Syntactic analysis

The syntactic analysis component of data parsing consists of building a parse tree. What does this mean? A parser takes the aforementioned tokens and arranges them into a parse tree. Tokens that serve only to group or separate other tokens, such as parentheses, curly braces, and semicolons, do not need nodes of their own, because the information they carry is captured in the nesting structure of the tree itself.

To further illustrate this concept, let’s look at a common mathematical example such as (x + 4) * 5. The diagram below illustrates the general process of data parsing in the form of a lexer and a parser.

In real-life applications, a data parser applies this same logic to more complex data. In its simplest form, a data parser creates tokens from the data in an HTML document and arranges those tokens into a parse tree.
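To make the tree-building step concrete, here is a small recursive-descent sketch for the expression (x + 4) * 5. The grammar, the nested-tuple tree format, and the function names are assumptions made for this illustration; real parsers are usually generated from a grammar or provided by a library.

```python
import re


def tokenize(text):
    """Split an arithmetic expression into tokens, dropping white space.

    Note: this simple lexer silently skips characters it does not recognize.
    """
    return re.findall(r"\d+|[A-Za-z_]\w*|[+\-*/()]", text)


def parse(tokens):
    """Build a nested-tuple parse tree from a list of tokens.

    Grammar assumed for this sketch:
        expr   := term (("+" | "-") term)*
        term   := factor (("*" | "/") factor)*
        factor := NUMBER | IDENT | "(" expr ")"
    """
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        return token

    def factor():
        token = peek()
        if token is None:
            raise SyntaxError("Unexpected end of input")
        advance()
        if token == "(":
            node = expr()
            if peek() != ")":
                raise SyntaxError("Expected ')'")
            advance()
            return node  # the parentheses vanish; nesting records the grouping
        if token in {"+", "-", "*", "/", ")"}:
            raise SyntaxError(f"Unexpected token {token!r}")
        return token

    def term():
        node = factor()
        while peek() in ("*", "/"):
            op = advance()
            node = (op, node, factor())
        return node

    def expr():
        node = term()
        while peek() in ("+", "-"):
            op = advance()
            node = (op, node, term())
        return node

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"Unexpected token {peek()!r}")
    return tree


print(parse(tokenize("(x + 4) * 5")))
# ('*', ('+', 'x', '4'), '5')
```

The parentheses from the input do not appear in the result: the fact that the addition is nested inside the multiplication carries the same information.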

Parsing technologies

So what technologies and languages can parsing methods be used with? Because data parsers are extremely flexible, they can be used in conjunction with many technologies, including:

Scripting languages

- Scripting languages create a series of commands that can be executed without the need for compiling.

- These languages are seen in web applications, games, and multimedia, as well as plugins and extensions.

HTML and XML

- Also known as Hypertext Markup Language, HTML is used to create web pages and web page applications that display data.

- Similarly, XML (eXtensible Markup Language) is used for the transportation of data within web pages and web applications.
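As a small sketch of parsing XML, the example below uses Python's standard `xml.etree.ElementTree` module to turn a document into a list of dictionaries. The XML content, its element names, and the `parse_jobs` helper are made up for illustration.

```python
import xml.etree.ElementTree as ET

# A made-up XML document used only for illustration.
XML_TEXT = """
<jobs>
  <job id="1"><title>Data Analyst</title><location>Berlin</location></job>
  <job id="2"><title>Backend Developer</title><location>Vilnius</location></job>
</jobs>
"""


def parse_jobs(xml_text):
    """Parse the XML text and return one dictionary per <job> element."""
    root = ET.fromstring(xml_text.strip())
    return [
        {
            "id": job.get("id"),  # attribute value
            "title": job.findtext("title"),  # text of a child element
            "location": job.findtext("location"),
        }
        for job in root.findall("job")
    ]


for job in parse_jobs(XML_TEXT):
    print(job)
```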

Interactive data language

- Interactive Data Language (IDL) is used for interactive processing of large amounts of data, including image processing.

- It is widely used in space sciences and solar physics.

Modeling languages

- Modeling languages are used to specify system requirements, structures, and behaviors.

- These languages are used by interested parties (developers, analysts, investors) to understand the workings of the system being modeled.

SQL and database languages

- Structured Query Language, or SQL, is a programming language used for managing data in database systems.

HTTPS and Internet Protocols

- Hypertext Transfer Protocol (HTTP), its secure variant HTTPS, and other internet protocols define how data is exchanged and are the foundation of data communication for the World Wide Web.
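One everyday instance of parsing in this area is breaking a URL into its components. The sketch below uses Python's standard `urllib.parse` module; the URL and the `parse_url` helper are hypothetical examples.

```python
from urllib.parse import parse_qs, urlsplit


def parse_url(url):
    """Split a URL into scheme, host, path, and query parameters."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "path": parts.path,
        "query": parse_qs(parts.query),  # each parameter maps to a list of values
    }


print(parse_url("https://example.com/jobs?title=analyst&page=2"))
```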

Data parsing use cases

Web scraping

Before beginning the data parsing process, companies must acquire high-quality data. Data extraction in the form of web scraping is an essential prerequisite to the data parsing process. During web scraping, a scraper retrieves an unparsed HTML document (see above for uses) that contains extraneous information, such as list tags. A parser then transforms the scraped data and removes such tags, in addition to performing the basic tasks previously mentioned (token creation and syntactic analysis).
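The tag-removal step can be sketched with Python's built-in `html.parser` module. This is a minimal example that assumes the scraped HTML is already available as a string; the `ListItemExtractor` class and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ListItemExtractor(HTMLParser):
    """Collect the text of every <li> element, discarding the tags."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_item = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._in_item = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_item:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._in_item:
            self.items.append("".join(self._buffer).strip())
            self._in_item = False


def extract_list_items(html):
    """Return the plain text of each list item in the given HTML."""
    parser = ListItemExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


# Made-up markup standing in for a scraped page.
RAW_HTML = "<ul><li>Data Analyst</li><li><b>Backend</b> Developer</li></ul>"
print(extract_list_items(RAW_HTML))
# ['Data Analyst', 'Backend Developer']
```

For real-world pages, which are often malformed, teams commonly reach for a dedicated HTML parsing library instead; the standard-library version is used here only to keep the sketch self-contained.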

Workflow optimization

Workflow optimization is built into the basic function of data parsers. By transforming unstructured data into data that is more readable, companies can improve their workflow.

Some teams that may see a substantial increase in productivity include data analysts, programmers, marketers, and investors.

Investment analysis

Acquiring data for investment efforts such as equity research, start-up evaluation, earnings forecasts, and competitive analysis is time-consuming. Likewise, data analysis requires access to substantial data processing resources. You can reduce this burden by using web scraping tools in conjunction with a data parser. This optimizes your workflow and consequently allows you to direct resources elsewhere or focus them on more in-depth data analysis.

More specifically, investors and data analysts can harness data parsing in a way that provides better insights for business decisions. Investors, hedge funds, and other professionals who evaluate start-ups, predict earnings, and even monitor social sentiment rely on web scraping followed by robust data parsing for up-to-date market insights.

In-house or outsource?

There are many reasons to build or buy a data parser. Let’s take a closer look at each option.

In-house pros

There are many benefits to building your own parser. First, it gives you more control over the parser’s specifications. Data parsers aren’t restricted to a particular data format; instead, they convert one data format into another, and how the data is converted depends on how the parser was built. For this reason, in-house parsers are beneficial due to their customizable nature.
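As a simple illustration of converting one data format into another, the sketch below parses CSV text and emits the same rows as JSON using only Python's standard library. The column names and the `csv_to_json` helper are assumptions for this example; an in-house parser would encode whatever conversion rules your business needs.

```python
import csv
import io
import json


def csv_to_json(csv_text):
    """Parse CSV text and return the same rows as a JSON array of objects."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2)


# Made-up input data.
CSV_TEXT = "company,employees\nAcme,120\nGlobex,45\n"
print(csv_to_json(CSV_TEXT))
```

Note that every value stays a string here; deciding whether `employees` should become a number is exactly the kind of conversion rule that depends on how the parser is built.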

In-house cons

Sourcing your data parser internally gives you complete control over maintenance and updates, and it has the potential to be more cost-efficient. However, that control comes with drawbacks. An in-house parser requires a server powerful enough for your parsing needs. Additionally, because you are fully responsible for your parser, maintaining, updating, and testing it may consume valuable time and resources.

Outsourcing pros

Purchasing a parser can be beneficial in many ways. Primarily, because the parser is built by a company that specializes in data extraction and parsing, you are less likely to run into problems. Additionally, you will have more time and resources available, as you won’t need to invest in a parser team or spend time maintaining your parser.

Outsourcing cons

The main problems that may arise with outsourcing your parser are cost and limited customizability. Because data parsing providers typically offer a cookie-cutter solution, you will likely miss out on ways to customize your parser to your exact business needs.

Coresignal and data parsing

Coresignal offers robust raw data that will help you achieve maximum insights. Specifically, Coresignal parses data scraped from the web and packages it into JSON (JavaScript Object Notation) format. The JSON format is beneficial in that the data arrives free of unnecessary HTML tags. Coresignal’s raw data packages are not semantically analyzed, providing you with maximum potential for insights and analysis.
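To show why JSON is convenient to work with, the sketch below loads a JSON record into native Python data structures with the standard `json` module. The record and its field names are made up for illustration and do not represent Coresignal’s actual schema.

```python
import json

# A made-up record; the field names are assumptions, not Coresignal's schema.
RAW_JSON = '{"name": "Acme", "industry": "Software", "employees": 120}'


def load_record(raw_json):
    """Load one JSON object and return it as a Python dictionary."""
    record = json.loads(raw_json)
    if not isinstance(record, dict):
        raise ValueError("Expected a JSON object")
    return record


record = load_record(RAW_JSON)
print(record["name"], record["employees"])
```

Because the data is already structured, no tag stripping or tokenizing is needed before analysis can begin.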

Ultimately, if your company handles data in any capacity, understanding data parsing will help you choose the best data parser for your business needs.

Frequently asked questions

What is the structure of a data parser?

The two components that make up a data parser are lexical analysis and syntactic analysis. These steps transform a string of unstructured data into a tree of data, whose rules and syntax are built into the tree’s structure.

What are data parsing technologies?

Some of the most common data parsing technologies include HTML, XML, scripting languages, modeling languages, SQL, and database languages.

Why is data parsing important?

Data parsing is important because it allows companies to better utilize public web data for decision-making and discovering business opportunities.
