Lukas Racickas

January 11, 2023

In general, data quality refers to the usefulness of a specific dataset towards a certain goal. Data quality can be measured in terms of accuracy, completeness, reliability, legitimacy, uniqueness, relevance, and availability.

Whether you work in development, sales, management, or acquisition, quality data is essential to day-to-day business operations. However, because data is so abundant and comes in many forms (quantitative, qualitative) and formats (JSON, CSV, XML), obtaining and maintaining quality data can be challenging. Understanding what data quality means is the first step toward meeting that challenge.

Because the term is used so widely, data quality has many definitions and can be improved in various ways. In this article, I will explore how data quality is defined, its main use cases, and six methods to improve your company’s overall data quality.

What does data quality mean?

The definition of data quality depends on who you ask. Broadly, it can be defined from the perspective of three main groups of people: consumers, business professionals, and data scientists.

While the definitions change depending on their intended use, the core meaning of data quality stays relatively the same. I will touch on these core principles later in the article.

For now, let’s take a look at the three most common definitions of data quality.

Data quality for consumers

Consumers understand data quality as data that fits its intended use, is kept secure, and explicitly meets consumer expectations.

Data quality for business professionals

Business experts understand data quality as data that aids in daily operations and decision-making processes. More specifically, high-quality data enhances workflows, provides insights, and meets a business’s practical needs.

Data quality for data scientists

Data scientists understand data quality on a more technical level: data that is accurate and useful and that satisfies its intended purpose.

Ultimately, these definitions of data quality are all united by their emphasis on purpose and accuracy. While these are important, many other dimensions can be used to measure data quality. First, let’s examine where data quality fits within data integrity, why it is important, and some common use cases.

Data integrity

Data integrity is the process that makes the data accessible and valuable to the entire organization. Data quality is only a part of the whole data integrity process. There are three main elements of data integrity.

Data integration

First, data from various sources has to be collected and integrated into a single dataset. The main purpose of this step is to make the data available and accessible to the entire business.

Data quality

Second, the integrated data must be of high quality. Poor-quality data is riddled with inconsistencies and inaccuracies that can render it useless.

Effective data quality management is imperative to keep data assets useful and insightful.

Data enrichment

Finally, once your data quality is in check, you can opt to enrich the data. Filling in gaps with data from additional sources is a great way to improve data completeness and accuracy.
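As a minimal sketch of enrichment, assume records are Python dictionaries that share a join key (the `domain` field here is hypothetical). Gaps in the base records are filled from a secondary source, while values that are already present are never overwritten:

```python
def enrich(records, reference, key="domain"):
    """Fill missing or empty fields in each record from a reference source.

    Existing non-empty values are kept; only None, "" or absent fields are filled.
    """
    # Index the reference data by the join key for constant-time lookups
    lookup = {ref[key]: ref for ref in reference}
    enriched = []
    for record in records:
        merged = dict(record)  # copy so the input records stay unchanged
        extra = lookup.get(record.get(key), {})
        for field, value in extra.items():
            if merged.get(field) in (None, ""):
                merged[field] = value
        enriched.append(merged)
    return enriched


companies = [{"domain": "example.com", "name": "Example Inc", "industry": None}]
reference = [{"domain": "example.com", "industry": "Software", "employees": 120}]
print(enrich(companies, reference))
# [{'domain': 'example.com', 'name': 'Example Inc', 'industry': 'Software', 'employees': 120}]
```

Refusing to overwrite existing values is a deliberate choice in this sketch: it keeps the base dataset authoritative and only uses the secondary source to close gaps.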

Why is data quality important?

There are many risks involved in low data quality. Forbes reports that low data quality can negatively affect a business’s revenue, lead generation, consumer sentiment, and internal company health. Maintaining high data quality affects virtually every aspect of a company’s workflow, from business intelligence and product or service management to consumer relations and security.

Now let’s take a closer look at the major use cases for data quality.

Use cases for data quality

Data standardization

Similar to data governance, data standardization involves organizing and inputting data according to negotiated standards established by local and international agencies.

Unlike data governance, which examines data quality management from a more macro and legal perspective, data standardization considers dataset quality on a micro level, including implementing company-wide data standards. This allows for further specification and accuracy in complex datasets.
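To illustrate what a company-wide standard might look like in practice, here is a minimal Python sketch assuming a hypothetical rule that every date must be stored in ISO 8601 form (YYYY-MM-DD). The list of accepted input formats is an assumption for illustration:

```python
from datetime import datetime

# Hypothetical company standard: store every date as ISO 8601 (YYYY-MM-DD).
# Accepted input formats are an illustrative assumption; order matters.
ACCEPTED_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y", "%d.%m.%Y"]


def standardize_date(raw):
    """Return the date in ISO 8601 form, or None if no accepted format matches."""
    text = raw.strip()
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    return None


for value in ["2023-01-11", "11/01/2023", "January 11, 2023", "11.01.2023", "next week"]:
    print(value, "->", standardize_date(value))
```

Listing a day-first format such as `%d/%m/%Y` encodes an assumption: a value like 03/04/2023 is read as 3 April. A real standard would need to state which convention applies, and values that match no format are returned as None so they can be reviewed rather than silently guessed.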

Data cleansing

Data cleansing, with regard to quality, is a process in which data wrangling tools correct corrupt data, remove duplicates, and resolve empty data entries.

Ultimately, this process aims to delete data that is considered “dirty” and replace it with sound, clear, and accurate data.
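A minimal Python sketch of such a cleansing pass, assuming records are dictionaries with hashable values: it normalizes whitespace, makes empty entries explicit, and drops duplicates that only differed in formatting. Correcting genuinely corrupt values usually needs domain-specific rules and is not covered here:

```python
def cleanse(rows):
    """Minimal cleansing pass over a list of dict records (illustrative only).

    - trims and collapses whitespace in string fields
    - turns blank strings into None so empty entries are explicit
    - drops exact duplicates that remain after normalization
    """
    seen = set()
    cleaned = []
    for row in rows:
        fixed = {}
        for field, value in row.items():
            if isinstance(value, str):
                value = " ".join(value.split())  # trim and collapse internal spaces
                value = value or None            # "" becomes None
            fixed[field] = value
        signature = tuple(sorted(fixed.items()))  # hashable fingerprint of the row
        if signature not in seen:
            seen.add(signature)
            cleaned.append(fixed)
    return cleaned


rows = [
    {"name": "  Acme   Corp ", "city": "Vilnius"},
    {"name": "Acme Corp", "city": "Vilnius"},
    {"name": "Beta Ltd", "city": "   "},
]
print(cleanse(rows))
# [{'name': 'Acme Corp', 'city': 'Vilnius'}, {'name': 'Beta Ltd', 'city': None}]
```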

Geocoding

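Geocoding is the process of converting location data, such as street addresses, into geographic coordinates. In data quality work, it usually goes hand in hand with correcting personal data such as names and addresses so that it conforms to international geographic standards.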

Personal data that does not conform to geographical standards can create negative consumer interactions and miscommunications.

Data governance

Data governance creates standards and definitions for data quality, aiding in maintaining high data quality across teams, industries, and countries.

The rules and regulations that establish data governance originate from legislative processes, legal findings, and data governance organizations such as DAMA and DGPO.

Data profiling

Data profiling is a process that examines and analyzes data from a particular dataset or database in order to create a larger picture (profile summary) for a particular entry, user, data type, etc.

Data profiling is used for risk management, data quality assurance, and analyzing metadata, which often goes overlooked.
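As a rough illustration, a column-level profile summary can be built with nothing but the Python standard library. This sketch assumes records are dictionaries with hashable values; the statistics chosen (count, missing, distinct, most common value, value types) are a common starting set rather than a fixed definition:

```python
from collections import Counter


def profile(rows):
    """Build a simple per-column profile summary for a list of dict records."""
    columns = {field for row in rows for field in row}
    summary = {}
    for column in sorted(columns):
        values = [row.get(column) for row in rows]
        present = [v for v in values if v not in (None, "")]
        counts = Counter(present)
        summary[column] = {
            "count": len(values),
            "missing": len(values) - len(present),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
            "types": sorted({type(v).__name__ for v in present}),
        }
    return summary


rows = [
    {"country": "LT", "employees": 120},
    {"country": "LT", "employees": "unknown"},
    {"country": "", "employees": 45},
]
for column, stats in profile(rows).items():
    print(column, stats)
```

In this example, the `employees` column reports both `int` and `str` values, which is exactly the kind of hidden issue a profile is meant to surface before the data is analyzed.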

Measuring data quality

As I mentioned previously, measuring data quality can be challenging, since many dimensions are used to measure a dataset’s quality. Here are the primary dimensions used in data quality measurement.

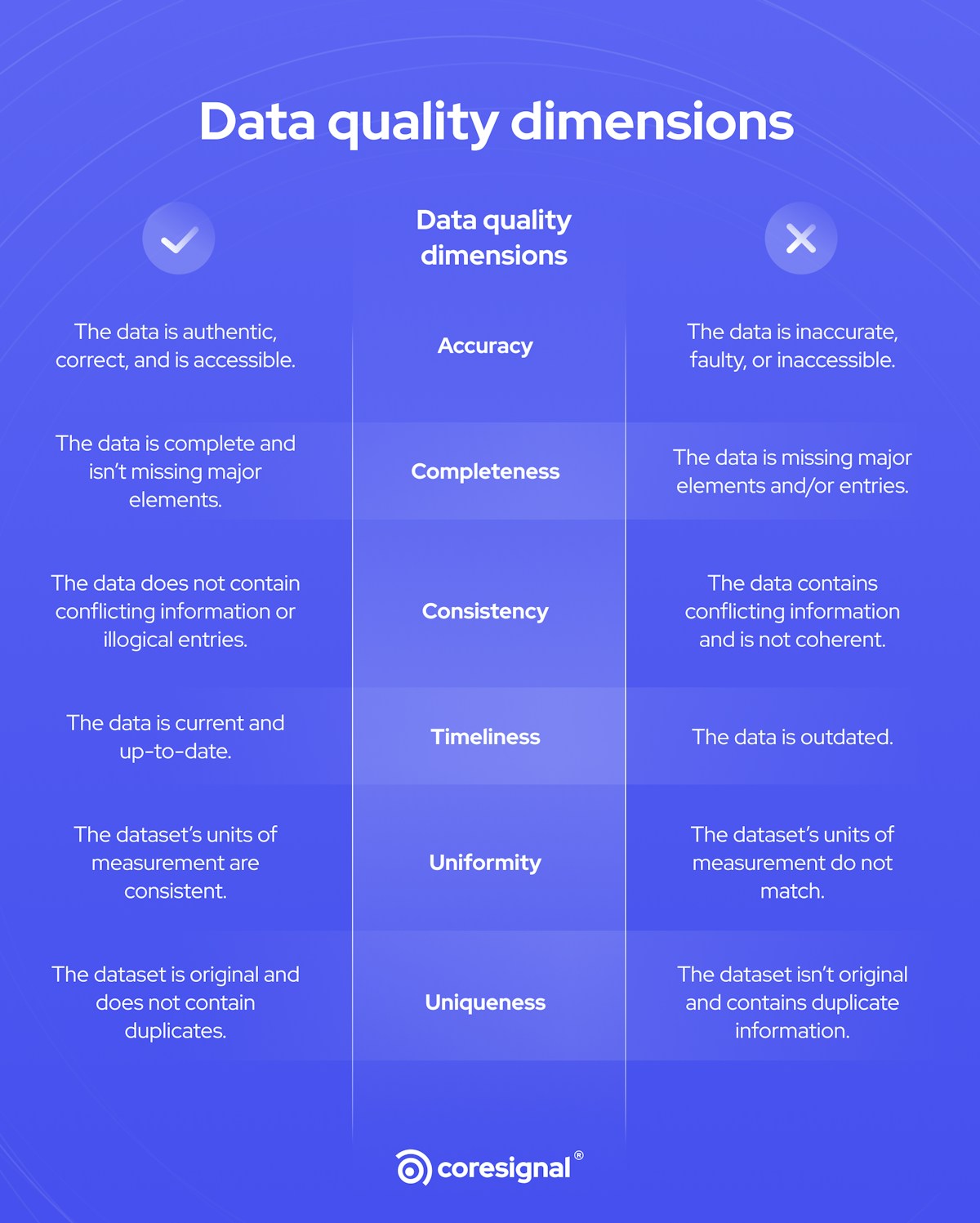

Data quality dimensions

This article focuses on six commonly used dimensions of data quality: accuracy, completeness, consistency, timeliness, uniformity, and uniqueness. Each of them is discussed in more detail below.

Data accuracy

Data accuracy measures how closely data reflects the real-world values it describes, within any restrictions the data collection tool has set in place.

For instance, data becomes inaccurate when someone reports false information or when an error occurs during the input process.
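One way to put a number on accuracy, sketched here under the assumption that you have a small set of verified values (for example, a manually checked sample), is to compare records against that trusted reference. The field and key names are hypothetical:

```python
def accuracy_rate(records, verified, key, field):
    """Share of records whose `field` matches a trusted, verified value.

    `verified` maps record keys to known-correct values; records without a
    verified counterpart are skipped. Returns None if nothing could be checked.
    """
    checked = correct = 0
    for record in records:
        truth = verified.get(record[key])
        if truth is None:
            continue  # nothing trusted to compare against
        checked += 1
        if record.get(field) == truth:
            correct += 1
    return correct / checked if checked else None


records = [
    {"id": 1, "country": "LT"},
    {"id": 2, "country": "LV"},
    {"id": 3, "country": "EE"},
    {"id": 4, "country": "PL"},
]
verified = {1: "LT", 2: "LT", 3: "EE"}  # hypothetical manually verified sample
print(f"{accuracy_rate(records, verified, 'id', 'country'):.0%}")  # 67%
```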

Data completeness

Completeness measures the proportion of expected values that are actually present in a dataset. Incomplete data contains missing values throughout a particular dataset.

Missing data can skew analysis results, for example by inflating them, and severe incompleteness may even render a dataset useless.
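Completeness is often reported per field as a percentage of filled values. A minimal Python sketch, assuming records are dictionaries and that None, an empty string, or an absent key all count as missing:

```python
def completeness(rows, fields):
    """Percentage of non-missing values for each field across all rows.

    None, "" and absent keys count as missing; returns None per field for an
    empty dataset, since completeness is undefined there.
    """
    total = len(rows)
    result = {}
    for field in fields:
        filled = sum(1 for row in rows if row.get(field) not in (None, ""))
        result[field] = 100 * filled / total if total else None
    return result


rows = [
    {"name": "Acme", "email": "info@acme.test"},
    {"name": "Beta", "email": None},
    {"name": "Gamma"},
    {"name": "Delta", "email": "hello@delta.test"},
]
print(completeness(rows, ["name", "email"]))  # {'name': 100.0, 'email': 50.0}
```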

Data consistency

Data consistency measures the coherence and uniformity of data across multiple systems. Inconsistent data contradicts itself across your datasets and can make it unclear which data points contain errors.

Inconsistencies often arise when different users enter data through different data entry systems.
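A simple consistency check compares the same records as stored in two systems. This Python sketch assumes each system can be exported as a mapping from record ID to field values; the system names and fields are hypothetical:

```python
def find_conflicts(system_a, system_b, fields):
    """Compare records present in both systems and report fields that disagree.

    Both inputs map a record ID to a dict of field values. Returns tuples of
    (record_id, field, value_in_a, value_in_b).
    """
    conflicts = []
    for record_id in sorted(system_a.keys() & system_b.keys()):
        a, b = system_a[record_id], system_b[record_id]
        for field in fields:
            if a.get(field) != b.get(field):
                conflicts.append((record_id, field, a.get(field), b.get(field)))
    return conflicts


crm = {
    "C1": {"email": "ana@example.com", "city": "Vilnius"},
    "C2": {"email": "tom@example.com", "city": "Kaunas"},
}
billing = {
    "C1": {"email": "ana@example.com", "city": "Kaunas"},
    "C3": {"email": "eva@example.com", "city": "Riga"},
}
print(find_conflicts(crm, billing, ["email", "city"]))
# [('C1', 'city', 'Vilnius', 'Kaunas')]
```

Note that the check only detects disagreement; deciding which system holds the correct value still requires a rule or a manual review.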

Timeliness

Timeliness refers to the rate at which data is updated. Timely data is updated often and does not contain outdated entries that may no longer be accurate.
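Timeliness can be checked by flagging records that have not been updated within an agreed window. In this minimal sketch, the `last_updated` field and the 90-day threshold are illustrative assumptions, and `today` is passed in explicitly (you could pass `date.today()`) so the check is reproducible:

```python
from datetime import date, timedelta


def stale_records(rows, today, max_age_days=90):
    """Return records whose `last_updated` date is older than the allowed age.

    The 90-day default is an arbitrary example threshold, not a standard.
    """
    cutoff = today - timedelta(days=max_age_days)
    return [row for row in rows if row["last_updated"] < cutoff]


rows = [
    {"id": 1, "last_updated": date(2023, 1, 2)},
    {"id": 2, "last_updated": date(2022, 6, 30)},
]
print(stale_records(rows, today=date(2023, 1, 11)))
# [{'id': 2, 'last_updated': datetime.date(2022, 6, 30)}]
```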

Data uniformity

Data uniformity measures whether the same units of measurement are used to record data. Data that is not uniform has entries recorded in different units, such as Celsius versus Fahrenheit or centimeters versus inches.
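Restoring uniformity usually means converting every entry into one agreed unit. This Python sketch assumes temperature readings arrive as (value, unit) pairs and that Celsius is the chosen standard; unknown units raise an error instead of being passed through silently:

```python
# Conversion functions into one agreed unit (Celsius); extend as needed.
TO_CELSIUS = {
    "C": lambda v: v,
    "F": lambda v: (v - 32) * 5 / 9,
    "K": lambda v: v - 273.15,
}


def to_uniform_celsius(readings):
    """Convert (value, unit) pairs to Celsius, rounded to two decimals."""
    converted = []
    for value, unit in readings:
        try:
            convert = TO_CELSIUS[unit.upper()]
        except KeyError:
            raise ValueError(f"unsupported unit: {unit!r}") from None
        converted.append(round(convert(value), 2))
    return converted


print(to_uniform_celsius([(21.5, "C"), (212, "F"), (273.15, "K")]))  # [21.5, 100.0, 0.0]
```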

Data uniqueness

Data uniqueness is a measurement of originality within a dataset. Specifically, uniqueness aims to account for duplicates within a dataset.

Uniqueness is typically measured on a percentage-based scale, where 100% data uniqueness signifies there are no duplicates in a dataset.
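One common way to compute this percentage is to divide the number of distinct records by the total number of records, where "distinct" is judged on a chosen set of key fields. A minimal Python sketch, with a hypothetical `email` key:

```python
def uniqueness_pct(rows, key_fields):
    """Percentage of records that are distinct on the given key fields.

    100.0 means no duplicates; an empty dataset is treated as fully unique.
    """
    if not rows:
        return 100.0
    keys = [tuple(row.get(f) for f in key_fields) for row in rows]
    return 100 * len(set(keys)) / len(keys)


rows = [
    {"email": "ana@example.com"},
    {"email": "tom@example.com"},
    {"email": "ana@example.com"},
    {"email": "eva@example.com"},
]
print(uniqueness_pct(rows, ["email"]))  # 75.0
```

Which fields count as the key is a business decision: two records with the same email but different names may be duplicates in one dataset and legitimate separate entries in another.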

6 methods to help you improve your data quality

As previously mentioned, data is an essential component of business intelligence. Likewise, compiling enough data for your datasets is just as crucial as collecting quality data.

Evaluating datasets is a difficult but necessary task that can set you apart from your competitors. Data quality issues can arise at many points in a data point’s life cycle.

Nevertheless, creating clear guidelines and setting thoughtful intentions when analyzing your data will increase your data quality, allowing a more precise understanding of what your data is telling you.

With that, let’s look at methods for improving data quality.

1. Collect unique data

Uniqueness, in this sense, refers to how specific the data you collect is to your needs, rather than to the absence of duplicates discussed above. It is important to use data that is specific to your business objectives and matches the intentions behind your data usage.

For example, suppose your company wants to monitor its competitors. Simply put, you should ask yourself: “Is this data relevant to my company’s established business goals?” If not, you may want to reassess what specific data you are collecting.

2. Collect it frequently

How often you collect data, which ties directly to timeliness (discussed above), determines how fresh your dataset’s information is. The frequency at which you collect new data or update the existing information in your dataset directly affects its quality.

The goal here is to establish recurring data collection cycles that support your business objectives.

3. Collect accurate data

Perfectly accurate data is not always possible because data collection involves a human component, but setting parameters on data intake reduces inaccuracies. In addition, manually reviewing random samples of your datasets will give you insight into how accurately consumers are entering data.
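Both ideas can be sketched in a few lines of Python. The intake rules below (an email format check and an age range) are hypothetical parameters chosen for illustration, and the email pattern is deliberately loose rather than a full validation of the email address standard:

```python
import random
import re

# Hypothetical intake rules; real parameters depend on your own data.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")


def validate_entry(entry):
    """Return a list of rule violations for one submitted entry (empty list = valid)."""
    errors = []
    if not EMAIL_PATTERN.match(entry.get("email") or ""):
        errors.append("email: invalid format")
    age = entry.get("age")
    if not isinstance(age, int) or not 16 <= age <= 100:
        errors.append("age: must be an integer between 16 and 100")
    return errors


def review_sample(entries, k=5, seed=None):
    """Pick up to k random entries for manual accuracy review."""
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    return rng.sample(entries, min(k, len(entries)))


print(validate_entry({"email": "ana@example.com", "age": 34}))  # []
print(validate_entry({"email": "ana@example", "age": 12}))
```

Rejecting an entry at intake is cheaper than correcting it later, while the random sample catches errors that pass the rules but are still wrong.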

At first glance, some datasets may look complete, but that doesn’t necessarily equate to accuracy.

4. Reduce noise in data

Noisy data can create unnecessary complexities and convoluted datasets.

For instance, there may be duplicates in your data or misspellings in entries that cause errors in the data analysis process. You can reduce noise through data matching, which compares individual data points with one another to find duplicates, misspellings, and redundant data (data that is not an exact duplicate but is already implied by other entries).

Much of this matching work can be automated with the data cleansing tools described earlier.
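As a small illustration of data matching, Python’s standard `difflib.SequenceMatcher` can score how similar two strings are. This sketch normalizes company names first and flags pairs above a similarity threshold; the 0.9 threshold is an assumption you would tune on your own data, and comparing every pair only scales to small lists:

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(name):
    """Lowercase and drop punctuation so trivial differences do not hide matches."""
    return "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace()).strip()


def likely_duplicates(names, threshold=0.9):
    """Return (a, b, score) for name pairs whose similarity meets the threshold.

    Compares every pair (O(n^2)), which is fine for small lists only.
    """
    pairs = []
    for a, b in combinations(names, 2):
        score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


names = ["Acme Corp", "ACME Corp.", "Acme Corpp", "Beta Ltd"]
for pair in likely_duplicates(names):
    print(pair)
```

Flagged pairs are candidates, not confirmed duplicates; a reviewer or an additional rule (for example, matching on a website domain as well) should decide which records to merge.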

5. Identify empty values in your data

Incomplete or missing data can negatively affect the quality of your datasets and, depending on which data profiling tools you use, can cause further errors when the data is read. The more empty values a dataset contains, the less reliable it becomes.

Therefore, ensuring completeness throughout the data collection process is essential to guaranteeing data quality.
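Empty values are not always literally empty: placeholders such as "N/A" or a lone dash often stand in for missing data. This Python sketch treats a hypothetical list of such tokens as empty and reports which required fields are missing in each record:

```python
# Placeholder tokens that often stand in for "no value"; this list is an assumption.
EMPTY_TOKENS = {"", "n/a", "na", "null", "none", "-", "unknown"}


def is_empty(value):
    """Treat None, blank strings, and common placeholder text as empty."""
    if value is None:
        return True
    return isinstance(value, str) and value.strip().lower() in EMPTY_TOKENS


def rows_with_gaps(rows, required):
    """Map each row index to the required fields that are empty in that row."""
    gaps = {}
    for i, row in enumerate(rows):
        missing = [field for field in required if is_empty(row.get(field))]
        if missing:
            gaps[i] = missing
    return gaps


rows = [
    {"name": "Acme", "phone": "N/A"},
    {"name": " ", "phone": "+370 600 00000"},
    {"name": "Beta", "phone": "+1 555 0100"},
]
print(rows_with_gaps(rows, ["name", "phone"]))  # {0: ['phone'], 1: ['name']}
```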

6. Invest in data quality best practices

According to Experian, human data entry errors account for 59% of reported inaccuracies. Just as you follow best practices elsewhere in the workplace, it is critical to have best practices for your dataset collection process.

Communicating about and interpreting your datasets consistently throughout your company improves how effectively your business uses that data. Establishing company-wide best practices for your dataset process will help ensure consistency and quality.

Wrapping up

Data quality affects all facets of business operations. Poor-quality data results in inefficient operations and inaccurate insights. In other words, it could hurt your business instead of helping it.

Data quality management tools must be in place to sustain high-quality data. Customer data is especially important: it changes constantly, and you should be the first to know about any changes so that you can deliver relevant and appropriate pitches. Data quality tools will help you keep data valid and useful, and consistent data management is key to successful data-driven business strategies.

Once you master data management, you will be able to reap the benefits that high-quality data offers. With this in mind, obtaining and maintaining quality data should be a priority for any successful business.

